About Me

I am Yong Dai.

Brief Bio

I am an AI researcher whose work bridges the gap between large language models and the complex, multi-modal world we live in. My journey began at the University of Electronic Science and Technology of China (UESTC), where I completed my Ph.D. under the guidance of Professor Zenglin Xu. During my doctoral studies, I also had the valuable opportunity to learn and grow as a research intern at Microsoft. This experience, combined with my subsequent role as a researcher at Tencent AI Lab, solidified my expertise in applying large-scale models to a wide range of downstream tasks.

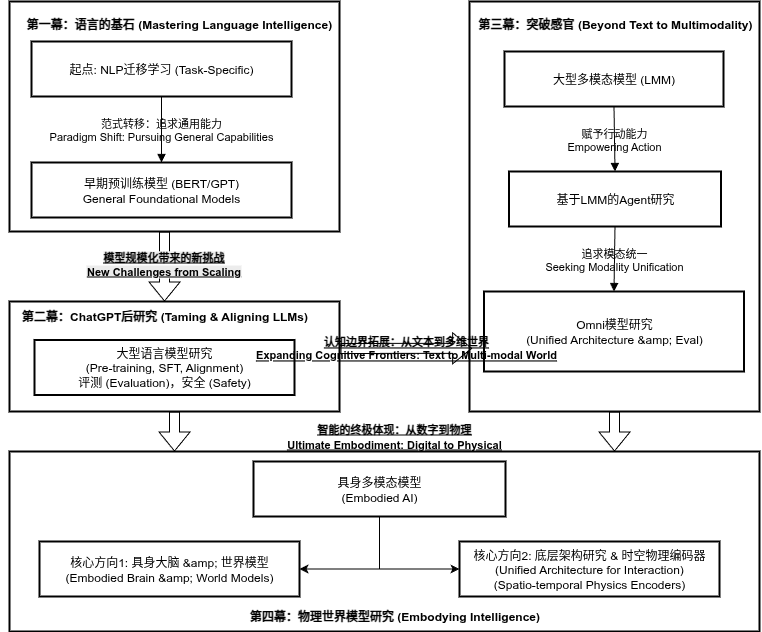

Recently, my focus has shifted to what I see as the next frontier: multi-modality and web agents. I am deeply fascinated by the pursuit of a unified paradigm, in which a single, elegant model can perceive, reason, and create across text, images, and other data formats. My long-term vision is to contribute to the development of a true “World Model.” By integrating such a system, capable of both generation and understanding, with autonomous agent technology, I aim to help build the foundation for Artificial General Intelligence (AGI) and to create systems that truly benefit humanity.

Experience

- 06/21 - 04/24: Research Intern and Researcher, Tencent AI Lab

- 12/20 - 04/21: Visiting student, Westlake University

- 10/19 - 10/20: Research Intern, Microsoft STCA nlpg

- 10/18 - 08/19: Project leader, cooperation project with Nuance

Research Interests

News

| Date | News |
|------|------|
| Feb 2025 | 🎉 Two papers accepted to ACM MM 2025 |
| Sep 2024 | 🎉 Two papers accepted to NeurIPS 2024 |
| Sep 2024 | 🎉 One paper accepted to EMNLP 2024 Findings |
| Jun 2024 | 🎉 Three papers accepted to ACL 2024 |
| Jun 2023 | 🎉 Paper SkillNet-X accepted to ICASSP 2024 |
| Dec 2022 | 🎉 Paper Federated Learning + PLMs accepted to Findings of ACL 2023 |
| Oct 2022 | 🎉 Paper Prompt-based Constrained Clustering accepted to Findings of EMNLP 2022 |
| Mar 2022 | 🎉 Paper Whole Word Masking accepted to Findings of ACL 2022 |
| Mar 2022 | 🎉 Paper Chinese GPT for Pinyin Input accepted to ACL 2022 |
| Jan 2022 | 🎉 Paper Graph Fusion Network accepted to KBS |
| Sep 2021 | 🎉 Paper Unsupervised Sentiment Analysis accepted to CC |
| Oct 2020 | 🎉 Paper Contextualize KBs with Transformer accepted to EMNLP 2021 (Oral) |
| Apr 2020 | 🎉 Paper Adversarial Training for Sentiment Analysis accepted to AAAI 2020 |